In-Depth Technical Guide to SQL Server 2025 T-SQL Enhancements

Introduction

It covers every major category—including new data types, AI/vector functions, advanced JSON and regex features, indexing improvements, query processing enhancements, security updates, streaming capabilities, and engine-level optimizations.Each section includes:

- What it is: A clear explanation of the new feature.

- Why it matters: Practical benefits for DBAs and developers.

- Practical example: Ready-to-run T-SQL scripts (enable preview features with:

ALTER DATABASE SCOPED CONFIGURATION SET PREVIEW_FEATURES = ON;

GOThis guide is designed for SQL Server professionals, database engineers, and architects who want a complete and practical reference to SQL Server 2025 improvements.

1. New Data Types

1.1 VECTOR Data Type

Practical example:

ALTER DATABASE SCOPED CONFIGURATION SET PREVIEW_FEATURES = ON;

GO

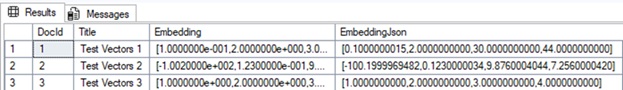

-- Create table with VECTOR column

CREATE TABLE dbo.Vectors (

DocId INT IDENTITY PRIMARY KEY,

Title NVARCHAR(200),

Embedding VECTOR(4) NOT NULL

);

GO

-- Insert sample data (embeddings as binary)

INSERT INTO dbo.Vectors (Title, Embedding)

VALUES ('Test Vectors 1', '[0.1, 2, 30, 44]'),

('Test Vectors 2', '[-100.2, 0.123, 9.876, 7.256]'),

('Test Vectors 3', JSON_ARRAY(1.0, 2.0, 3.0, 4.0));

GO

-- View as JSON for debugging

SELECT DocId, Title, CAST(Embedding AS NVARCHAR(MAX)) AS EmbeddingJson

FROM dbo.Vectors;

1.2 JSON Data Type

Practical example:

-- Create table with JSON column

CREATE TABLE Orders (

OrderId INT IDENTITY PRIMARY KEY,

OrderData JSON NOT NULL -- Native type, auto-validates

);

GO

-- Insert JSON

INSERT INTO Orders (OrderData)

VALUES ('{"customer":"John Doe","items":[{"name":"Laptop","price":999}],"total":999}');

GO

-- Query specific paths

SELECT OrderId, JSON_VALUE(OrderData, '$.customer') AS Customer,

JSON_QUERY(OrderData, '$.items') AS ItemsArray

FROM Orders

WHERE JSON_VALUE(OrderData, '$.total') > 500;

2. New Functions

2.1 Vector and AI Functions

| Function | Description |

|---|---|

| VECTOR_DISTANCE | Calculates the distance between two vectors using a specified distance metric. |

| VECTOR_SEARCH (preview) | Returns the closest vectors to a given query vector and distance metric using an approximate vector search algorithm. |

| VECTOR_NORM | Takes a vector as input and returns the norm (length/magnitude) using a specified norm type. |

| VECTOR_NORMALIZE | Returns the normalized vector scaled to length 1 according to the specified norm type. |

| VECTORPROPERTY | Returns specific structural or statistical properties of a given vector. |

Practical example:

DECLARE @v1 AS VECTOR(2) = '[25.220200126129058, 55.27695446131732]'; -- Dubai

DECLARE @v2 AS VECTOR(2) = '[41.011715197431464, 28.95562641991561]'; -- Istanbul

SELECT VECTOR_DISTANCE('euclidean', @v1, @v2) AS euclidean,

VECTOR_DISTANCE('cosine', @v1, @v2) AS cosine,

VECTOR_DISTANCE('dot', @v1, @v2) AS dot;

GO

DECLARE @v3 AS VECTOR(4);

DECLARE @v4 AS VECTOR(4);

SELECT @v3 = v.Embedding FROM dbo.Vectors AS v WHERE v.DocId = 1

SELECT @v4 = v.Embedding FROM dbo.Vectors AS v WHERE v.DocId = 2

SELECT VECTOR_DISTANCE('euclidean', @v3, @v4) AS euclidean,

VECTOR_DISTANCE('cosine', @v3, @v4) AS cosine,

VECTOR_DISTANCE('dot', @v3, @v4) AS dot;

GO

SELECT v.Embedding, VECTOR_NORMALIZE(Embedding, 'norm1') AS norm1

, VECTOR_NORMALIZE(Embedding, 'norm2') AS norm2

, VECTOR_NORMALIZE(Embedding, 'norminf') AS norminf

FROM dbo.Vectors AS v

GO

AI_GENERATE_EMBEDDINGS and AI_GENERATE_CHUNKS:

Calls external model for embeddings. Splits long text into chunks for embedding (new from previews).

Practical example:

-- Enable the external REST endpoint invocation on the database server

EXECUTE sp_configure 'external rest endpoint enabled', 1;

RECONFIGURE WITH OVERRIDE;

GO

-- Create a database master key

IF NOT EXISTS (SELECT *

FROM sys.symmetric_keys

WHERE [name] = '##MS_DatabaseMasterKey##')

BEGIN

CREATE MASTER KEY ENCRYPTION BY PASSWORD = N'';

END

GO

-- Create access credentials to Azure OpenAI using a key

CREATE DATABASE SCOPED CREDENTIAL [https://my-azure-openai-endpoint.cognitiveservices.azure.com/]

WITH IDENTITY = 'HTTPEndpointHeaders', secret = '{"api-key":"YOUR_AZURE_OPENAI_KEY"}';

GO

-- Create an external model to call the Azure OpenAI embeddings REST endpoint

CREATE EXTERNAL MODEL MyAzureOpenAIModel

WITH (

LOCATION = 'https://my-azure-openai-endpoint.cognitiveservices.azure.com/openai/deployments/text-embedding-ada-002/embeddings?api-version=2023-05-15',

API_FORMAT = 'Azure OpenAI',

MODEL_TYPE = EMBEDDINGS,

MODEL = 'text-embedding-ada-002',

CREDENTIAL = [https://my-azure-openai-endpoint.cognitiveservices.azure.com/]

);

GO

-- Create a table with text to chunk and insert data

CREATE TABLE textchunk

(

text_id INT IDENTITY (1, 1) PRIMARY KEY,

text_to_chunk NVARCHAR (MAX)

);

GO

INSERT INTO textchunk (text_to_chunk)

VALUES ('Your chenk text 1'),

('Your chenk text 2');

GO

-- Create a new table to hold the chunked text and vector embeddings

CREATE TABLE text_embeddings

(

embeddings_id INT IDENTITY (1, 1) PRIMARY KEY,

chunked_text NVARCHAR (MAX),

vector_embeddings VECTOR(1536)

);

GO

-- Insert the chunked text and vector embeddings into the text_embeddings table

-- using AI_GENERATE_CHUNKS and AI_GENERATE_EMBEDDINGS:

INSERT INTO text_embeddings (chunked_text, vector_embeddings)

SELECT c.chunk,

AI_GENERATE_EMBEDDINGS(c.chunk USE MODEL MyAzureOpenAIModel)

FROM textchunk AS t

CROSS APPLY AI_GENERATE_CHUNKS (

SOURCE = t.text_to_chunk,

CHUNK_TYPE = FIXED,

CHUNK_SIZE = 100

) AS c;

GO

-- View the results

SELECT *

FROM text_embeddings;

2.2 Regular Expression (RegEx) Functions

| Function | Description |

|---|---|

| REGEXP_LIKE | Returns a Boolean value that indicates whether the text input matches the regex pattern. |

| REGEXP_REPLACE | Returns a modified source string replaced by a replacement string, where occurrence of the regex pattern found. |

| REGEXP_SUBSTR | Extracts parts of a string based on a regular expression pattern. Returns Nth occurrence of a substring that matches the regex pattern. |

| REGEXP_INSTR | Returns the starting or ending position of the matched substring, depending on the option supplied. |

| REGEXP_COUNT | Returns a count of the number of times that regex pattern occurs in a string. |

| REGEXP_MATCHES | Returns a table of captured substring(s) that match a regular expression pattern to a string. If no match is found, the function returns no row. |

| REGEXP_SPLIT_TO_TABLE | Returns a table of strings split, delimited by the regex pattern. If there’s no match to the pattern, the function returns the string. |

All SARGable.

This implementation of regular expression is based on the RE2 regular expression library. For more information, visit RE2 Regular Expression Syntax

Practical example:

REGEXP_LIKE

Indicates if the regular expression pattern matches in a string.

REGEXP_LIKE requires database compatibility level 170 and above. If the database compatibility level is lower than 170, REGEXP_LIKE isn’t available.

USE AdventureWorks2022

GO

ALTER DATABASE AdventureWorks2022 SET COMPATIBILITY_LEVEL = 170;

GO

-- Select all records from the Person table where the first name starts with R and ends with b

SELECT *

FROM [Person].[Person] AS p

WHERE REGEXP_LIKE (p.FIRSTNAME, '^R.*b$');

-- Select all records from the Person table where the first name starts with A and ends with Y, using case-insensitive mode

SELECT *

FROM [Person].[Person] AS p

WHERE REGEXP_LIKE (p.FIRSTNAME, '^R.*B$', 'i');

-- Select all records from the Products table where the product name contains at least one consecutive vowels:

SELECT *

FROM [Production].[Product] AS p

WHERE REGEXP_LIKE (p.NAME, '[AEIOU]{1,}');

-- Create an employees table with CHECK constraints for the Email and Phone_Number columns:

DROP TABLE IF EXISTS Employees;

CREATE TABLE Employees

(

ID INT IDENTITY (101, 1),

[Name] VARCHAR (150),

Email VARCHAR (320)

CHECK (REGEXP_LIKE (Email, '^[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}$')),

Phone_Number NVARCHAR (20)

CHECK (REGEXP_LIKE (Phone_Number, '^(\d{3})-(\d{3})-(\d{4})$'))

);

REGEXP_REPLACE

Returns a modified source string replaced by a replacement string, where the occurrence of the regular expression pattern found. If no matches are found, the function returns the original string.

-- Replace all occurrences of a or e with - in the product names.

SELECT p.NAME, REGEXP_REPLACE(p.NAME, '[ae]', '-', 1, 0, 'i')

FROM [Production].[Product] AS p;

-- Replace the first occurrence of cat or dog with pet in the product descriptions

SELECT pd.DESCRIPTION, REGEXP_REPLACE(pd.DESCRIPTION, 'cat|dog', 'pet', 1, 1, 'i')

FROM [Production].[ProductDescription] AS pd;

-- Replace the last four digits of the phone numbers with asterisks

SELECT pp.PHONENUMBER, REGEXP_REPLACE(pp.PHONENUMBER, '\d{4}$', '****')

FROM [Person].[PersonPhone] AS pp;

REGEXP_SUBSTR

Returns one occurrence of a substring of a string that matches the regular expression pattern. If no match is found, it returns NULL.

-- Extract the domain name from an email address.

SELECT ea.EmailAddress, REGEXP_SUBSTR(ea.EmailAddress, '@(.+)$', 1, 1, 'i', 1) AS DOMAIN

FROM [Person].[EmailAddress] AS ea;

-- Find the first word in a sentence that starts with a vowel.

SELECT pd.DESCRIPTION, REGEXP_SUBSTR(pd.DESCRIPTION, '\b[aeiou]\w*', 1, 1, 'i') AS WORD

FROM [Production].[ProductDescription] AS pd;

-- Get the last four digits of a credit card number.

SELECT cc.CARDNUMBER, REGEXP_SUBSTR(cc.CARDNUMBER, '\d{4}$') AS LAST_FOUR

FROM [Sales].[CreditCard] AS cc;

REGEXP_INSTR

Returns the starting or ending position of the matched substring, depending on the value of the return_option argument.

-- Find the position of the first substring that contains only digits in the DESCRIPTION column.

SELECT pd.DESCRIPTION, REGEXP_INSTR(pd.DESCRIPTION, '\d+')

FROM [Production].[ProductDescription] AS pd;

-- Find the position of the third occurrence of the letter a (case-insensitive) in the NAME column.

SELECT p.NAME, REGEXP_INSTR(p.NAME, 'a', 1, 3, 0, 'i')

FROM [Production].[Product] AS p;

-- Find the position of the end of the first substring that starts with t and ends with e (case-sensitive) in the DESCRIPTION column.

SELECT pd.DESCRIPTION, REGEXP_INSTR(pd.DESCRIPTION, 't.*?e', 1, 1, 1)

FROM [Production].[ProductDescription] AS pd;

REGEXP_COUNT

Counts the number of times that a regular expression pattern is matched in a string.

-- Count how many times the letter a appears in each product name.

SELECT p.NAME, REGEXP_COUNT(p.NAME, 'a') AS A_COUNT

FROM [Production].[Product] AS p;

-- Count how many products have a name that ends with ing.

SELECT COUNT(*)

FROM [Production].[Product] AS p

WHERE REGEXP_COUNT(p.NAME, 'ing$') > 0;

-- Count how many products have a name that contains three consecutive consonants, ignoring case.

SELECT COUNT(*)

FROM [Production].[Product] AS p

WHERE REGEXP_COUNT(p.NAME, '[^aeiou]{3}', 1, 'i') > 0;

REGEXP_MATCHES

Returns a table of captured substrings that match a regular expression pattern to a string. If no match is found, the function returns no row.

-- Return tabular results from 'Learning #AzureSQL #AzureSQLDB' that start with a # character

-- followed by one or more alphanumeric characters (A-Z, a-z, 0-9) or underscores (_).

SELECT *

FROM REGEXP_MATCHES ('Learning #AzureSQL #AzureSQLDB', '#([A-Za-z0-9_]+)');

-- Return strings from ABC that match strings that start with the letter A followed by exactly two characters.

SELECT *

FROM REGEXP_MATCHES ('ABC', '^(A)(..)$');

REGEXP_SPLIT_TO_TABLE

Returns a table of strings split, delimited by the regex pattern. If there’s no match to the pattern, the function returns the string.

-- Return a table split for the quick brown fox jumps over the lazy dog.

SELECT *

FROM REGEXP_SPLIT_TO_TABLE ('the quick brown fox jumps over the lazy dog', '\s+');

2.3 JSON Functions

| Function | Description |

|---|---|

| ISJSON | Tests whether a string contains valid JSON. |

| JSON_ARRAY | Constructs JSON array text from zero or more expressions. |

| JSON_ARRAYAGG* | Constructs a JSON array from an aggregation of SQL data or columns. |

| JSON_MODIFY | Updates the value of a property in a JSON string and returns the updated JSON string. |

| JSON_OBJECT | Constructs JSON object text from zero or more expressions. |

| JSON_OBJECTAGG* | Constructs a JSON object from an aggregation of SQL data or columns. |

| JSON_PATH_EXISTS | Tests whether a specified SQL/JSON path exists in the input JSON string. |

| JSON_QUERY | Extracts an object or an array from a JSON string. |

| JSON_VALUE | Extracts a scalar value from a JSON string. |

| OPENJSON | Parses JSON text and returns objects and properties from the JSON input as rows and columns. |

Practical example:

JSON_OBJECTAGG

The JSON_OBJECTAGG syntax constructs a JSON object from an aggregation of SQL data or columns. JSON_OBJECTAGG can also be used in a SELECT statement with GROUP BY GROUPING SETS clause.

-- Return JSON object with one key

-- The following example returns a JSON object with one key and null value.

SELECT JSON_OBJECTAGG('key':NULL);

-- Construct JSON object from result set

-- The following example constructs a JSON object with three properties from a result set.

SELECT JSON_OBJECTAGG(c1:c2)

FROM (

VALUES('key1', 'c'), ('key2', 'b'), ('key3', 'a')

) AS t(c1, c2);

-- Return result with two columns

-- The following example returns a result with two columns. The first column contains the object_id value. The second column contains a JSON object where the key is the column name and value is the column_id.

SELECT TOP (5) c.object_id,

JSON_OBJECTAGG(c.name:c.column_id) AS columns

FROM sys.columns AS c

GROUP BY c.object_id;

-- Return a JSON object as JSON type

-- The following example returns a JSON object as json type.

SELECT JSON_OBJECTAGG('a':1 RETURNING JSON);

-- Return aggregated result with four columns

-- The following example returns a result with four columns from a SELECT statement

-- containing SUM and JSON_OBJECTAGG aggregates with GROUP BY GROUPING SETS.

-- The first two columns return the id and type column value. The third column total_amount

-- returns the value of SUM aggregate on the amount column. The fourth column json_total_name_amount

-- returns the value of JSON_OBJECTAGG aggregate on the name and amount columns.

;WITH T AS (

SELECT *

FROM (

VALUES (1, 'k1', 'a', 2),

(1, 'k2', 'b', 3),

(1, 'k3', 'b', 4),

(2, 'j1', 'd', 7),

(2, 'j2', 'd', 9)

) AS b(id, name, type, amount)

)

SELECT id,

type,

SUM(amount) AS total_amount,

JSON_OBJECTAGG(name:amount) AS json_total_name_amount

FROM T

GROUP BY GROUPING SETS((id), (type), (id, type), ());

JSON_ARRAYAGG

Constructs a JSON array from an aggregation of SQL data or columns. JSON_ARRAYAGG can also be used in a SELECT statement with GROUP BY GROUPING SETS clause.

-- The following example returns an empty JSON array.

SELECT JSON_ARRAYAGG(NULL);

--The following example constructs a JSON array with three elements from a result set.

SELECT JSON_ARRAYAGG(c1)

FROM (VALUES ('c'), ('b'), ('a')) AS t(c1);

-- The following example constructs a JSON array with three elements ordered by the value of the column.

SELECT JSON_ARRAYAGG( c1 ORDER BY c1)

FROM (

VALUES ('c'), ('b'), ('a')

) AS t(c1);

-- The following example returns a result with two columns. The first column contains the object_id value.

-- The second column contains a JSON array containing the names of the columns. The columns in the

-- JSON array are ordered based on the column_id value.

SELECT TOP(5) c.object_id, JSON_ARRAYAGG(c.name ORDER BY c.column_id) AS column_list

FROM sys.columns AS c

GROUP BY c.object_id;

-- The following example returns a result with four columns from a SELECT statement

-- containing SUM and JSON_ARRAYAGG aggregates with GROUP BY GROUPING SETS.

-- The first two columns return the id and type column value. The third column total_amount

-- returns the value of SUM aggregate on the amount column. The fourth column json_total_amount

-- returns the value of JSON_ARRAYAGG aggregate on the amount column.

; WITH T AS (

SELECT *

FROM (VALUES (1, 'k1', 'a', 2),

(1, 'k2', 'b', 3),

(1, 'k3', 'b', 4),

(2, 'j1', 'd', 7),

(2, 'j2', 'd', 9)) AS b(id, name, type, amount)

)

SELECT id,

type,

SUM(amount) AS total_amount,

JSON_ARRAYAGG(amount) AS json_total_amount

FROM T

GROUP BY GROUPING SETS((id), (type), (id, type), ());

-- The following example returns a JSON array as json type.

SELECT JSON_ARRAYAGG(1 RETURNING JSON);

2.4 Fuzzy Matching Functions

| Function | Description |

|---|---|

| EDIT_DISTANCE | Calculates the number of insertions, deletions, substitutions, and transpositions needed to transform one string to another. |

| EDIT_DISTANCE_SIMILARITY | Calculates a similarity value ranging from 0 (indicating no match) to 100 (indicating full match). |

| JARO_WINKLER_DISTANCE | Calculates the edit distance between two strings giving preference to strings that match from the beginning for a set prefix length. |

| JARO_WINKLER_SIMILARITY | Calculates a similarity value ranging from 0 (indicating no match) to 100 (indicating full match). |

Practical example:

-- The following example compares two words and returns the

-- EDIT_DISTANCE() value as a column, named Distance.

SELECT 'Colour' AS WordUK,

'Color' AS WordUS,

EDIT_DISTANCE('Colour', 'Color') AS Distance;

-- The following example compares two words and returns

-- the EDIT_DISTANCE_SIMILARITY() value as a column, named Distance.

SELECT 'Colour' AS WordUK,

'Color' AS WordUS,

EDIT_DISTANCE_SIMILARITY('Colour', 'Color') AS Distance;

-- The following example compares two words and returns the

-- JARO_WINKLER_DISTANCE value as a column, named Distance.

SELECT 'Colour' AS WordUK,

'Color' AS WordUS,

JARO_WINKLER_DISTANCE('Colour', 'Color') AS Distance;

-- The following example compares two words and returns the

-- JARO_WINKLER_SIMILARITY value as a column, named Similarity.

SELECT 'Colour' AS WordUK,

'Color' AS WordUS,

JARO_WINKLER_SIMILARITY('Colour', 'Color') AS Similarity;

2.5 String, Math, and Date Functions

| Function | Description |

|---|---|

| UNISTR | UNISTR provides support for Unicode string literals by letting you specify the Unicode encoding value of characters in the string. UNISTR returns the Unicode characters corresponding to the input expression, as defined by the Unicode standard. |

| PRODUCT | The PRODUCT function returns the product of all the values, or only the DISTINCT values, in an expression. Use with numeric columns only. Null values are ignored. |

| CURRENT_DATE | This function returns the current database system date as a date value, without the database time and time zone offset. CURRENT_DATE derives this value from the underlying operating system on the Database Engine runs. |

| SUBSTRING | with optional length |

| DATEADD | with bigint |

| DATETRUNC | The DATETRUNC function returns an input date truncated to a specified datepart |

Practical example:

-- Use UNISTR vs the NCHAR function

-- The following examples all use the UNISTR functions to perform the necessary conversion

-- of the Unicode values into string character set, to display the Unicode character Smiling

-- Face With Open Mouth. The database collation must be a UTF-8 collation if the input is of

-- char or varchar data types.

SELECT N'Hello! ' + NCHAR(0xd83d) + NCHAR(0xde00);

SELECT UNISTR(N'Hello! \D83D\DE00');

SELECT UNISTR(N'Hello! \+01F603');

-- Use UNISTR function with user defined escape character

-- The following example uses the UNISTR function with a custom escape character to perform

-- the necessary conversion of the Unicode into a string character set.

SELECT UNISTR(N'ABC#00C0#0181#0187', '#');

-- Use UNISTR function by combining string literals and Unicode code points

-- In the following example, UNISTR is used with a user-defined escape character ($) and a varchar

-- data type with UTF-8 collation. It combines string literals with a Unicode codepoint value:

SELECT UNISTR('I $2665 Azure SQL.' COLLATE Latin1_General_100_CI_AS_KS_SC_UTF8, '$');

-- Use UNISTR function for characters beyond the UTF-8 limit

-- If you need to use a character set beyond UTF-8, you must convert the character sequence

-- to UTF-8 using the COLLATE clause. Here's an example:

SELECT UNISTR('\306F\3044' COLLATE Latin1_General_100_BIN2_UTF8) AS Yes_in_Japanese_Hiragana;

-- Multiply rows together

-- The following example uses the PRODUCT function:

SELECT PRODUCT(CAST(pod.OrderQty AS DECIMAL(38, 3))) AS ProductOfPrices

, COUNT(*)

FROM Purchasing.PurchaseOrderDetail AS pod

WHERE ModifiedDate BETWEEN '2014-07-01' AND '2015-05-26'

GROUP BY pod.PurchaseOrderID

-- Use the OVER clause

-- The following example uses the PRODUCT function with the OVER clause to provide a rate of

-- return on hypothetical financial instruments. The data is partitioned by finInstrument.

SELECT finInstrument,

PRODUCT(1 + rateOfReturn) OVER (PARTITION BY finInstrument) AS CompoundedReturn

FROM (VALUES (0.1626, 'instrumentA'),

(0.0483, 'instrumentB'),

(0.2689, 'instrumentC'),

(-0.1944, 'instrumentA'),

(0.2423, 'instrumentA')

) AS MyTable(rateOfReturn, finInstrument);

-- Get the current system date and time

SELECT SYSDATETIME(),

SYSDATETIMEOFFSET(),

SYSUTCDATETIME(),

CURRENT_TIMESTAMP,

GETDATE(),

GETUTCDATE(),

CURRENT_DATE;

-- Get the current system date

SELECT CONVERT (DATE, SYSDATETIME()),

CONVERT (DATE, SYSDATETIMEOFFSET()),

CONVERT (DATE, SYSUTCDATETIME()),

CONVERT (DATE, CURRENT_TIMESTAMP),

CONVERT (DATE, GETDATE()),

CONVERT (DATE, GETUTCDATE()),

CURRENT_DATE;

-- The following example shows how to return only a part of a character string.

-- From the sys.databases table, this query returns the system database names in the first column,

-- the first letter of the database in the second column, and the third and fourth characters

-- in the final column.

SELECT name,

SUBSTRING(name, 1, 1) AS Initial,

SUBSTRING(name, 3, 2) AS ThirdAndFourthCharacters

FROM sys.databases

WHERE database_id < 5;

-- Use SUBSTRING without a length argument to find replacement parts in AdventureWorks2022 inventory

SELECT [ProductDescriptionID],

[Description],

SUBSTRING([Description], LEN('Replacement') + 1) AS [Replacement-Part]

FROM [Production].[ProductDescription]

WHERE [Description] LIKE 'Replacement%';

-- The following examples illustrate the use of various datepart options:

DECLARE @d datetime2 = '2021-12-08 11:30:15.1234567';

SELECT 'Year', DATETRUNC(year, @d);

SELECT 'Quarter', DATETRUNC(quarter, @d);

SELECT 'Month', DATETRUNC(month, @d);

SELECT 'Week', DATETRUNC(week, @d); -- Using the default DATEFIRST setting value of 7 (U.S. English)

SELECT 'Iso_week', DATETRUNC(iso_week, @d);

SELECT 'DayOfYear', DATETRUNC(dayofyear, @d);

SELECT 'Day', DATETRUNC(day, @d);

SELECT 'Hour', DATETRUNC(hour, @d);

SELECT 'Minute', DATETRUNC(minute, @d);

SELECT 'Second', DATETRUNC(second, @d);

SELECT 'Millisecond', DATETRUNC(millisecond, @d);

SELECT 'Microsecond', DATETRUNC(microsecond, @d);

3. Window Functions

4. Indexing

4.1 Vector Index (DISKANN)

Practical example:

The following code block creates mock embeddings with the following steps:

- Enables the trace flag, necessary in the current preview.

- Create a sample table dbo.Articles with a column embedding with data type vector(5).

- Insert sample data with mock embedding data.

- Create a vector index on dbo.Articles.embedding.

- Demonstrate the vector similarity search with the VECTOR_SEARCH() function.

-- Step 0: Enable Preview Feature

ALTER DATABASE SCOPED CONFIGURATION SET PREVIEW_FEATURES = ON;

GO

-- Step 1: Create a sample table with a VECTOR(5) column

CREATE TABLE dbo.Articles

(

id INT PRIMARY KEY,

title NVARCHAR(100),

content NVARCHAR(MAX),

embedding VECTOR(5)

);

-- Step 2: Insert sample data

INSERT INTO Articles (id, title, content, embedding)

VALUES

(1, 'Intro to AI', 'This article introduces AI concepts.', '[0.1, 0.2, 0.3, 0.4, 0.5]'),

(2, 'Deep Learning', 'Deep learning is a subset of ML.', '[0.2, 0.1, 0.4, 0.3, 0.6]'),

(3, 'Neural Networks', 'Neural networks are powerful models.', '[0.3, 0.3, 0.2, 0.5, 0.1]'),

(4, 'Machine Learning Basics', 'ML basics for beginners.', '[0.4, 0.5, 0.1, 0.2, 0.3]'),

(5, 'Advanced AI', 'Exploring advanced AI techniques.', '[0.5, 0.4, 0.6, 0.1, 0.2]');

-- Step 3: Create a vector index on the embedding column

CREATE VECTOR INDEX vec_idx

ON Articles(embedding)

WITH (metric = 'cosine', type = 'diskann');

-- Step 4: Perform a vector similarity search

DECLARE @qv VECTOR(5) = '[0.3, 0.3, 0.3, 0.3, 0.3]';

SELECT t.id,

t.title,

t.content,

s.distance

FROM VECTOR_SEARCH(table = Articles AS t,

column = embedding,

similar_to = @qv,

metric = 'cosine',

top_n = 3) AS s

ORDER BY s.distance, t.title;

4.2 JSON Path Index

Practical example:

DROP TABLE IF EXISTS docs;

CREATE TABLE docs

(

id INT PRIMARY KEY,

content JSON

);

GO

-- Create a JSON index on a JSON column

DROP INDEX IF EXISTS json_content_index ON docs

CREATE JSON INDEX json_content_index

ON docs (content);

GO

-- Create a JSON index on a JSON column with specific paths

DROP INDEX IF EXISTS json_content_index ON docs

CREATE JSON INDEX json_content_index

ON docs (content)

FOR ('$.a', '$.b')

WITH (FILLFACTOR = 80);

GO

-- JSON index with array search optimization

-- The following example returns JSON indexes for the table dbo.Customers.

-- The JSON index is created with the array search optimization option enabled.

DROP TABLE IF EXISTS dbo.Customers;

CREATE TABLE dbo.Customers

(

customer_id INT IDENTITY PRIMARY KEY,

customer_info JSON NOT NULL

);

GO

CREATE JSON INDEX CustomersJsonIndex

ON dbo.Customers (customer_info) WITH (OPTIMIZE_FOR_ARRAY_SEARCH = ON);

GO

INSERT INTO dbo.Customers (customer_info)

VALUES ('{"name":"customer1", "email": "customer1@example.com", "phone":["123-456-7890", "234-567-8901"]}');

GO

SELECT object_id,

index_id,

optimize_for_array_search

FROM sys.json_indexes AS ji

WHERE object_id = OBJECT_ID('dbo.Customers');

5. Tables and Structures

5.1 Native Support in Tables

Practical example:

See data type examples above.

5.2 Change Event Streaming (CES)

Practical example:

Enable streaming on a table

-- Enable Change Tracking for a Database

ALTER DATABASE AdventureWorks2022

SET CHANGE_TRACKING = ON

(CHANGE_RETENTION = 2 DAYS, AUTO_CLEANUP = ON)

GO

-- Enable Change Tracking for a Table

ALTER TABLE Person.Person

ENABLE CHANGE_TRACKING

WITH (TRACK_COLUMNS_UPDATED = ON)

GO

-- Disable Change Tracking for a Table or Database

ALTER DATABASE AdventureWorks2022

SET CHANGE_TRACKING = OFF

GO

ALTER TABLE Person.Person

DISABLE CHANGE_TRACKING;

GO

-- Use the change tracking functions to obtain changes

-- To obtain the changed rows for a table and information about the changes,

-- use CHANGETABLE(CHANGES...). For example, the following query obtains changes

-- for the SalesLT.Product table.

DECLARE @last_synchronization_version bigint;

-- Obtain the current synchronization version. This will be used next time that changes are obtained.

SET @last_synchronization_version = CHANGE_TRACKING_CURRENT_VERSION();

SELECT CT.BusinessEntityID, CT.SYS_CHANGE_OPERATION,

CT.SYS_CHANGE_COLUMNS, CT.SYS_CHANGE_CONTEXT

FROM CHANGETABLE(CHANGES Person.Person, @last_synchronization_version) AS CT;

GO

DECLARE @last_synchronization_version bigint;

SELECT

CT.BusinessEntityID, P.FirstName, P.LastName, P.Title,

CT.SYS_CHANGE_OPERATION, CT.SYS_CHANGE_COLUMNS,

CT.SYS_CHANGE_CONTEXT

FROM Person.Person AS P

RIGHT OUTER JOIN CHANGETABLE(CHANGES Person.Person, @last_synchronization_version) AS CT

ON CT.BusinessEntityID = P.BusinessEntityID;

-- View changes via system views

SELECT *

FROM sys.change_tracking_tables

WHERE object_id = OBJECT_ID('Person');

Streams include schema, old/new values – ideal for zero-ETL to Fabric.

5.3 REST Endpoint Invocation

Practical example:

Response of the HTTP call and the resulting data sent back by the invoked endpoint is available through the @response output parameter. @response might contain a JSON document with the following schema:

"response": {

"status": {

"http": {

"code": 200,

"description": "OK"

}

},

"headers": {

"Date": "Thu, 08 Sep 2022 21:51:22 GMT",

"Content-Length": "1345",

"Content-Type": "application\/json; charset=utf-8",

"Server": "Kestrel",

"Strict-Transport-Security": "max-age=31536000; includeSubDomains"

}

}

Or the @response might contain an XML document with the following schema:

<response>

<status>

<http code="200" description="OK" />

</status>

<headers>

<header key="Date" value="Tue, 01 Apr 1976 21:12:04 GMT" />

<header key="Content-Length" value="2112" />

<header key="Content-Type" value="application/xml" />

<header key="Server" value="Windows-Azure-Blob/1.0 Microsoft-HTTPAPI/2.0" />

<header key="x-ms-request-id" value="31536000-64bi-64bi-64bi-31536000" />

<header key="x-ms-version" value="2021-10-04" />

<header key="x-ms-creation-time" value="Wed, 19 Apr 2023 22:17:33 GMT" />

<header key="x-ms-server-encrypted" value="true" />

</headers>

</response>-- Requires EXECUTE ANY EXTERNAL ENDPOINT database permission.

GRANT EXECUTE ANY EXTERNAL ENDPOINT TO [];

GO

-- To enable the sp_invoke_external_rest_endpoint stored procedure in SQL Server 2025 (17.x)

-- and Azure SQL Managed Instance, run the following T-SQL code:

EXECUTE sp_configure 'external rest endpoint enabled', 1;

RECONFIGURE WITH OVERRIDE;

GO

-- The following example calls an Azure Function using an HTTP trigger binding allowing anonymous access.

DECLARE @ret AS INT, @response AS NVARCHAR (MAX);

EXECUTE

@ret = sp_invoke_external_rest_endpoint

@url = N'https://.azurewebsites.net/api/?key1=value1',

@headers = N'{"header1":"value_a", "header2":"value2", "header1":"value_b"}',

@payload = N'{"some":{"data":"here"}}',

@response = @response OUTPUT;

SELECT @ret AS ReturnCode,

@response AS Response;

GO

-- This example reads a file from Azure Blob Storage using a SAS token for authentication. The results are returned in XML, so you must use the header "Accept":"application/xml".

DECLARE @ret AS INT, @response AS NVARCHAR (MAX);

EXECUTE

@ret = sp_invoke_external_rest_endpoint

@url = N'https://blobby.blob.core.windows.net/datafiles/my_favorite_blobs.txt?sp=r&st=2023-07-28T19:56:07Z&se=2023-07-29T03:56:07Z&spr=https&sv=2022-11-02&sr=b&sig=XXXXXX1234XXXXXX6789XXXXX',

@headers = N'{"Accept":"application/xml"}',

@method = 'GET',

@response = @response OUTPUT;

SELECT @ret AS ReturnCode,

@response AS Response;

GO

Using Azure SQL Database you can use OpenAI:

-- Enable the external scripts invocation on the database server

EXEC sp_configure 'external scripts enabled', 1;

RECONFIGURE;

GO

-- Create a database master key

IF NOT EXISTS (SELECT *

FROM sys.symmetric_keys

WHERE [name] = '##MS_DatabaseMasterKey##')

CREATE MASTER KEY ENCRYPTION BY PASSWORD = N'YourStrongPassword123!';

ELSE

OPEN MASTER KEY DECRYPTION BY PASSWORD = 'YourStrongPassword123!';

GO

-- Create access credentials to OpenAI using a key

DROP DATABASE SCOPED CREDENTIAL OpenAI_Credential

CREATE DATABASE SCOPED CREDENTIAL OpenAI_Credential

WITH

IDENTITY = 'HTTPEndpointHeaders',

SECRET = '{"Authorization":"Bearer sk-proj-....bugG4jez2wFgRtA-AA"}';

GO

EXEC sys.sp_invoke_external_rest_endpoint

@url = 'https://api.openai.com/v1/chat/completions',

@method = 'POST',

@credential = 'OpenAI_Credential',

@payload = '{

"model": "gpt-4o-mini",

"messages": [

{"role": "user", "content": "Hello from SQL Server"}

]

}';

6. Core Engine Improvements for T-SQL

- Better cardinality estimation for complex expressions,

- Optional Parameter Plan Optimization (OPPO) to mitigate parameter sniffing,

- expanded DOP feedback,

- adaptive memory grants,

- improved locking (TID/LAQ to reduce blocking),

- faster parallel redo,

- and 20-50% speedups on big joins/windows.

Practical example:

-- Stored proc with variable param – OPPO generates multiple plans on first runs

CREATE PROC GetSales @MinAmount INT

AS

SELECT *

FROM Sales.SalesOrderDetail AS sod WHERE sod.OrderQty > @MinAmount;

GO

-- Builds optimized plans for different values

EXEC GetSales 500;

-- No more bad cached plans from sniffing!

7. Security & Encryption Enhancements

Practical example:

-- Create conditional mask

ALTER TABLE [Person].[EmailAddress]

ALTER COLUMN [EmailAddress]

ADD MASKED WITH (FUNCTION = 'partial(1,"xxx",0)');

CREATE USER TestUser WITHOUT LOGIN;

GRANT SELECT ON Person.EmailAddress TO TestUser;

GO

EXECUTE AS USER = 'TestUser';

SELECT EmailAddress FROM Person.EmailAddress;

REVERT;

For Always Encrypted: Query encrypted cols directly with new ops.

8. TDS & Query Store Improvements

Practical example:

Query Store on secondary

-- Enable on readable secondary

ALTER DATABASE AdventureWorks2022

SET QUERY_STORE = ON (OPERATION_MODE = READ_WRITE);

-- Analyze regressions

SELECT *

FROM sys.query_store_runtime_stats

WHERE avg_duration > 1000000; -- Flags slow queries, including vector ones

TDS 8.0: Use in ODBC for PolyBase – auto-encrypts vector data transfers.

9. Other T-SQL Changes

String Concat || :

SELECT 'Hello' || ' World'; -- ANSI standard.Query Optimizations:

ABORT_QUERY_EXECUTION:

Practical example:

IF @@ROWCOUNT > 100000

ABORT_QUERY_EXECUTION;

-- in procs.

10. External AI Model Management (Bonus T-SQL Feature)

Practical example:

-- Step 1: Enable developer preview features on SQL Server 2025

ALTER DATABASE SCOPED CONFIGURATION

SET PREVIEW_FEATURES = ON;

-- Step 2: Enable the local AI runtime on SQL Server 2025

EXECUTE sp_configure 'external AI runtimes enabled', 1;

RECONFIGURE WITH OVERRIDE;

-- Step 3: Set up the ONNX Runtime library

PowerShell:

cd C:\

mkdir onnx_runtime

-- Step 4: Set up the tokenization library

-- Download and build the tokenizers-cpp library from GitHub.

-- Once the dll is created, place the tokenizer in the C:\onnx_runtime directory.

-- Step 5: Download the ONNX model

-- Start by creating the model directory in C:\onnx_runtime\.

PowerShell:

cd C:\onnx_runtime

mkdir model

cd C:\onnx_runtime\model

git clone https://huggingface.co/nsense/all-MiniLM-L6-v2-onnx

-- Step 6: Set directory permissions

-- Use the following PowerShell script to provide the MSSQLLaunchpad

-- user access to the ONNX Runtime directory:

PowerShell:

$AIExtPath = "C:\onnx_runtime";

$Acl = Get-Acl -Path $AIExtPath

$AccessRule = New-Object System.Security.AccessControl.FileSystemAccessRule("MSSQLLaunchpad", "FullControl", "ContainerInherit,ObjectInherit", "None","Allow")

$Acl.AddAccessRule($AccessRule)

Set-Acl -Path $AIExtPath -AclObject $Acl

-- Step 7: Create the external model

-- Run the following query to register your ONNX model as an external model object:

CREATE EXTERNAL MODEL myLocalOnnxModel

WITH (

LOCATION = 'C:\onnx_runtime\model\all-MiniLM-L6-v2-onnx',

API_FORMAT = 'ONNX Runtime',

MODEL_TYPE = EMBEDDINGS,

MODEL = 'allMiniLM',

PARAMETERS = '{"valid":"JSON"}',

LOCAL_RUNTIME_PATH = 'C:\onnx_runtime\'

);

-- Step 8: Generate embeddings

-- Use the ai_generate_embeddings function to test the model by running the following query:

SELECT AI_GENERATE_EMBEDDINGS(N'Test Text' USE MODEL myLocalOnnxModel);